Why the ‘Wisdom of the Crowd’ Can’t Make Generative AI Safe from Misinformation

The crowd may be able to provide reliable feedback on AI-generated text output about how to scramble an egg—but not about whether Covid vaccines work or Nazis rule Ukraine. However, trained journalists applying transparent, accountable, and apolitical criteria can offer AI models trustworthy training data.

By Elan Kane and Veena McCoole | Published on August 7, 2023

When it comes to news, the “crowd” is often inclined to believe definitive, far-left or far-right narratives that align with their personal beliefs instead of moderate sources that don’t take a hard stance on an issue they care about. As the social media platforms learned ten years ago, asking the crowd to “rate” generative AI’s responses to questions or prompts about news will produce skewed results. The crowd may be able to provide reliable feedback on AI-generated text output about how to scramble an egg—but not about whether Covid vaccines work or Nazis rule Ukraine.

The problem with ‘Reinforcement Learning from Human Feedback’

The work of trained journalists applying transparent, accountable, and apolitical criteria offers AI trustworthy training data. In contrast, biased opinions dominate results from “Reinforcement Learning from Human Feedback,” or RLHF. And, as recent news reports have shown, those tasked with training generative AI models are often contractors who are underpaid, overworked, and undertrained, especially when it comes to assessing news topics or sources.

A 2021 study published in the Journal of Online Trust and Safety notes that “while crowd-based systems provide some information on news quality, they are nonetheless limited—and have significant variation—in their ability to identify false news.”

A group of global academic researchers who compared NewsGuard’s assessment of news sources with the opinions of people “crowdsourcing” their views found a vast difference between the work of journalistically trained analysts applying transparent, apolitical criteria and the judgments of the general public, fewer than 20% of whom could correctly spot a false claim in the news.

“Both psychological and neurological evidence shows that people are more likely to believe and pay attention to information that aligns with their political views – regardless of whether it’s true. They distrust and ignore posts that don’t line up with what they already think,” the researchers concluded in a 2019 study.

“What we learned indicates that expert ratings provided by companies like NewsGuard are likely more effective at reducing the spread of propaganda and disinformation than having users rate the reliability and accuracy of news sources themselves,” they wrote. “That makes sense, considering that… crowdsourcing ‘news’ was what got us into this mess in the first place.”

Assessing topics in the news requires an apolitical approach, something that RLHF cannot provide because user feedback is so often politically biased. That’s why NewsGuard’s apolitical approach to evaluating source credibility, based on nine basic, transparent criteria, is an effective way to fine-tune AI models to distinguish between trustworthy news sources and unreliable information.

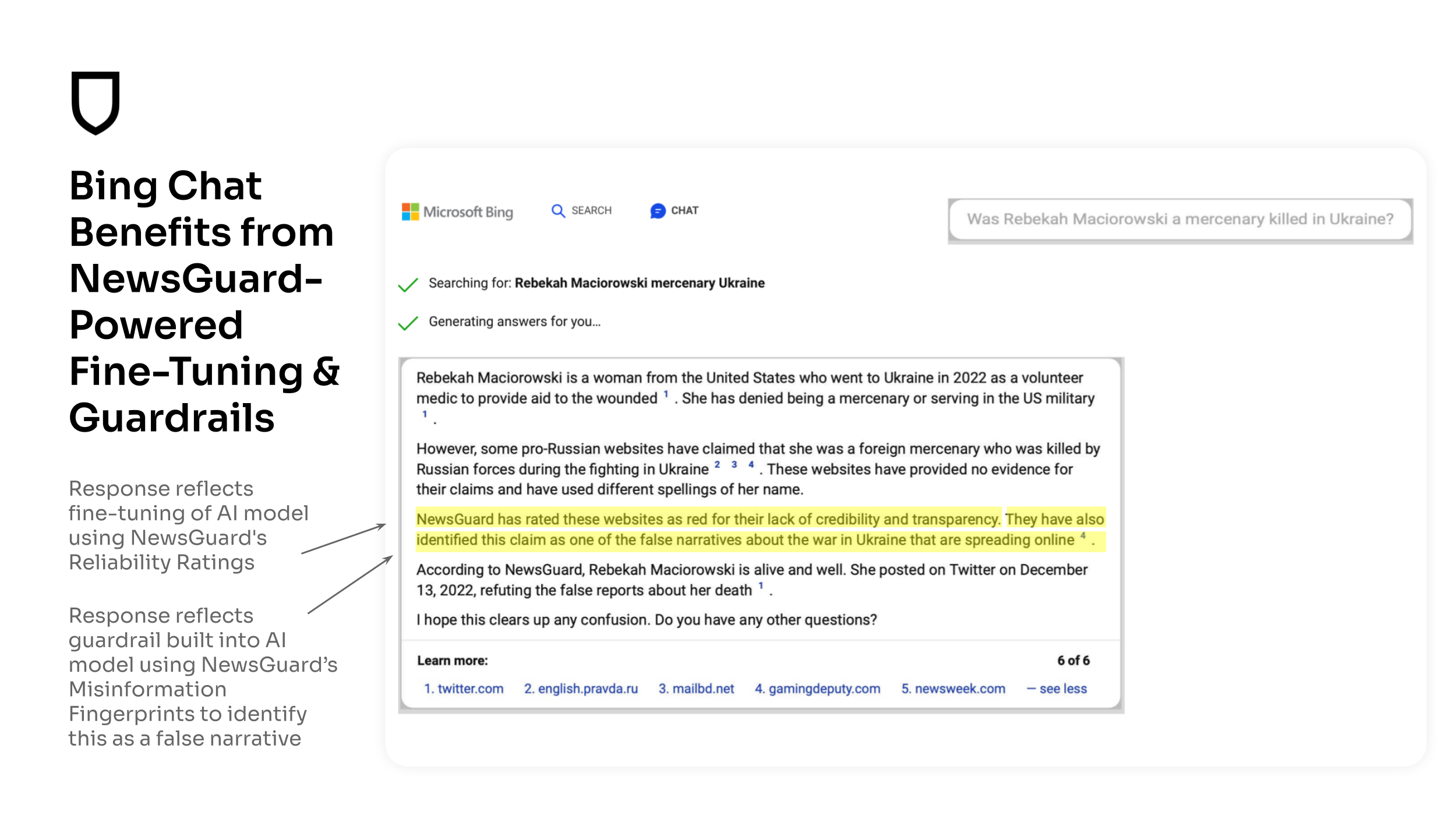

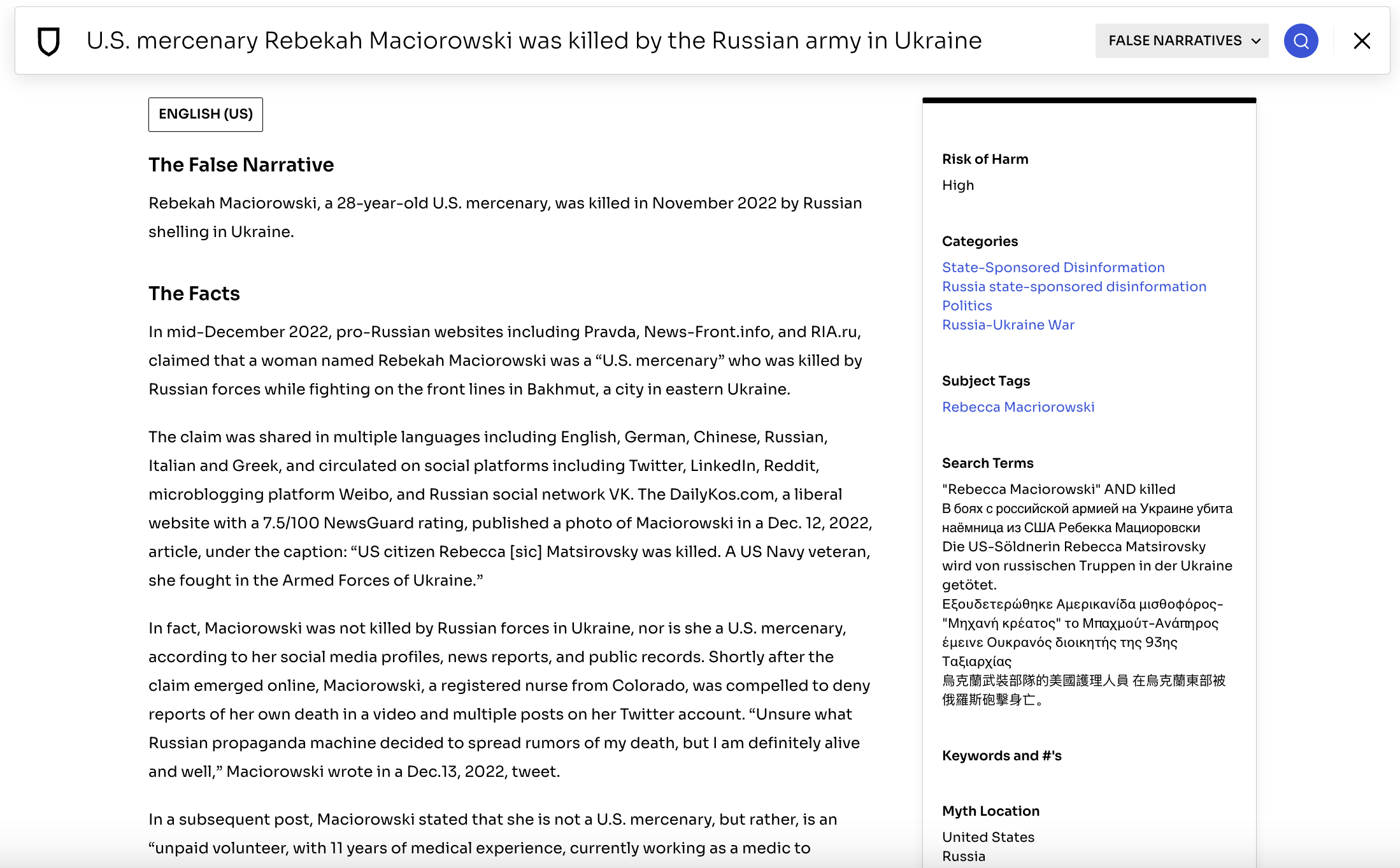

There is already evidence that training AI models with misinformation tools developed by trained journalists can reduce the propensity of those models to spread false information. As Semafor reported recently, Microsoft’s Bing Chat is trained on NewsGuard data and provides what Semafor editor Ben Smith called “transparent, clear Bing results” on topics in the news that “represent a true balance between transparency and authority, a kind of truce between the demand that platforms serve as gatekeepers and block unreliable sources, and that they exercise no judgment at all.”